Edge laptops

Always-on, efficient AI

NPUs stay cool handling assistants, copilots, and background perception without burning the iGPU/CPU. Users avoid the thermal throttling that plagues CPU/iGPU-only stacks.

Technology deep dive

FastFlowLM retools the entire inference stack for AMD’s XDNA-based Ryzen™ AI silicon. Instead of mirroring GPU kernels, we partition prefill and decoding into tile-sized workloads, keep KV state resident on-chip, and stream attention math through the NPU’s 12 TFLOPS bf16 fabric.

The result: up to 5.2× faster prefill and 4.8× faster decoding than the iGPU, 33.5× / 2.2× gains versus the CPU, while drawing 67× / 223× less power. We also lift the context ceiling from 2K to 256K tokens and halve Gemma 3 image TTFT from 8 s down to 4 s on the same laptop-class part.

Prefill acceleration

5.2× vs iGPU

Ryzen AI NPU prefill throughput.

Decoding acceleration

4.8× vs iGPU

Tile-aware token streaming.

Context window

256K tokens

Up from 2K in stock stacks.

Power draw

67× / 223× less

NPU vs iGPU / CPU.

Image TTFT

4 s

Gemma 3 vision, down from 8 s.

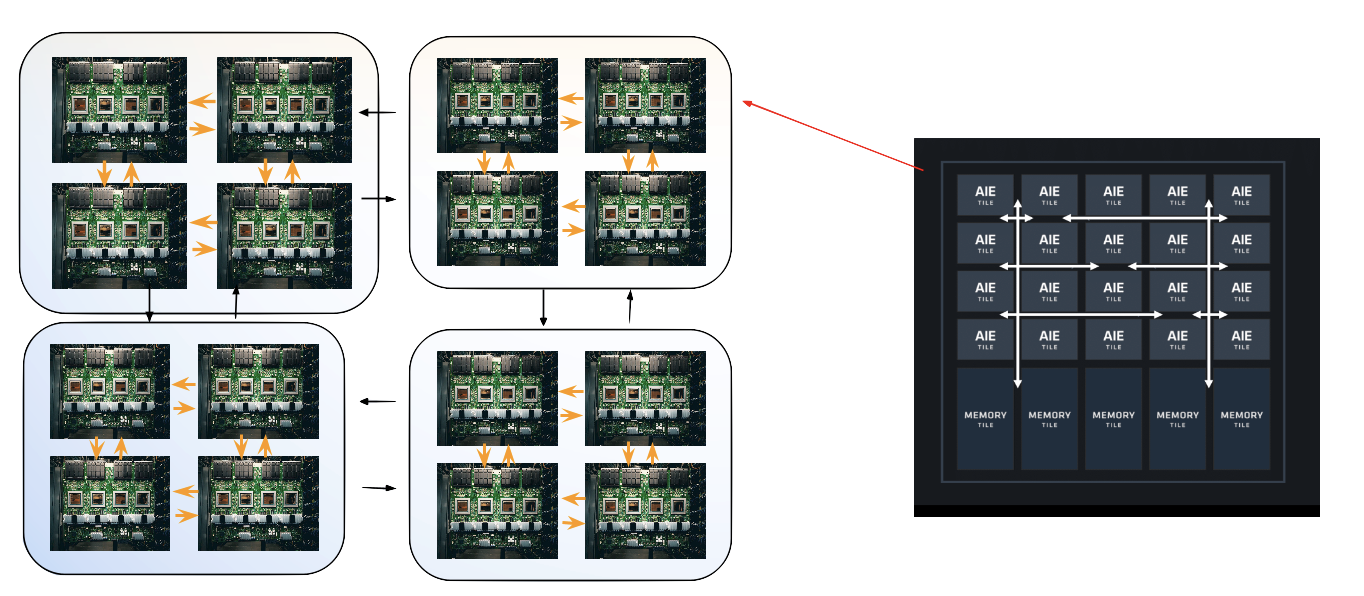

Parallel-by-design

The Ryzen AI chip blends an iGPU and an always-on NPU, both rated at 12 TFLOPS bf16 compute. While the GPU enjoys 125 GB/s of memory bandwidth, the NPU sits at 60 GB/s—so FastFlowLM had to attack the problem with software-led tiling, compression, and scheduling.

We map transformer blocks to configurable tiles, fuse matmuls + activation, and ensure the compute fabric never waits on host memory.

Attention state stays inside NPU SRAM, enabling 256K-token prompts without bouncing to LPDDR.

Always-on inference taps the NPU’s low-leakage island, yielding the 67×/223× power savings cited above.

FastFlowLM dissects inference into deterministic phases so the runtime can pipeline work across CPU, GPU, and NPU.

Token embedding, rotary math, and large matmuls are staged across contiguous tiles for the 5.2× prefill gains.

Lightweight decode kernels reuse on-chip KV blocks, hold steady at 4.8× faster than the iGPU, and avoid cache thrash.

Image TTFT drops from 8 s to 4 s by overlapping patch projection with text prefill on separate compute islands.

Edge to rack

Ryzen AI proves the concept locally, but the same architecture is already moving toward rack-scale NPU deployments.

Edge laptops

NPUs stay cool handling assistants, copilots, and background perception without burning the iGPU/CPU. Users avoid the thermal throttling that plagues CPU/iGPU-only stacks.

Rack roadmap

Qualcomm is redefining rack inference with Hexagon NPUs, while AMD is building a discrete XDNA accelerator. FastFlowLM’s close-to-metal runtime is ready for both paths.

Projected scaling

With equal compute and bandwidth, our rack NPU plan models >10.4× faster prefill, >9.6× faster decoding, and >114× better TPS/W than GPU baselines.

Rack architecture